Very few marketplaces and classifieds have content moderation systems that are prepared for growth because they often underestimate how quickly they might scale.

Scaling can happen in a number of ways:

• You launched a new feature that made it easier for people to list new products

• You expanded into a new geography that led to a massive uptick in new users

• That recent marketing campaign went viral and crashed your servers with new visitors

Each of these requires robust infrastructure to handle the growth challenges that come with a surge in user-generated content. We all know what people on the internet are capable of posting. The quality of your marketplace is directly linked to the quality of the content on it.

When it comes to marketplace moderation systems, you might’ve chosen to play it safe and follow the age-old adage: “If it’s not broken, don’t fix it.”

But is this the right approach when readying your company for the future?

You can easily balance one foot on a single brick.

But does that balance hold if it’s five bricks stacked on top of one another?

We often hear marketplaces and classifieds tell us “Our systems are fine. They’re not the best and can we do better? Yes. But, they’re ok right now and not the biggest priority”. It’s understandable why some marketplace executives find merit in this approach of staying the course.

You invest in something because it helps you a) increase revenue, b) stay out of jail, and c) cut costs, in that order.

So priority usually goes to things that directly impact revenue, like marketing. Having said that, we know how low-quality content of any kind on your site can result in lost user trust, churn, and lost revenue. In fact, we’ve seen cases where poorly moderated SKUs ended up in Google and Facebook ads, triggering an ad ban for the marketplace for promoting prohibited content.

Safety is the single biggest differentiator for a marketplace or classifieds site, because giants like Facebook, Amazon, and Google have entered this business and are bringing their war machines with them.

Inventory differs little across platforms, and the big players have sophisticated systems to deal with this issue, the best A.I. talent in the world, and all the data they need to power content moderation at unprecedented scale. The mid-market must do a lot of heavy lifting to avoid being out-competed.

One way of looking at it is:

Marketplaces need a dependable moderation system because content moderation, fraud, and overall trust and safety are a big deal. Every year, marketplaces lose users and money because of them.

In 2017, victims of internet crime, ecommerce fraud included, reported total losses of $1.42 billion (Source – 2017 Internet Crime Report). According to the Internet Crime Complaint Center’s report, in 2007 the overall financial loss from C2C online auction marketplace fraud reported within the U.S. alone amounted to more than $53.5 million, with a median reported loss of $483.95 per complaint.

A survey conducted in February–March 2018 by the International Classifieds and Marketplaces Association (ICMA) singled out fraud and disruptive business models (like Google and Facebook) as the biggest threats to the classifieds industry.

As a marketplace operator, do you feel confident that your current content moderation system is competent enough to serve you for the next 5-10 years?

The struggle in maintaining trust in marketplaces

In 2008, a research study titled “Investigating the impact of C2C electronic marketplace quality” surveyed 597 consumers to see which factors affected their overall trust in an online marketplace. The study tested a set of ‘buyer trust factor hypotheses’ and reported which ones the survey data supported or rejected.

The researchers expected factors such as website appearance, ease of use, and the ability to contact sellers to affect buyer trust in marketplaces. To their (and our) surprise, they didn’t.

One of the hypotheses that the data supported was that “Seller trust positively influences a buyer’s attitude towards purchasing in a C2C electronic marketplace”, which is quite telling.

One of the biggest takeaways for marketplace and classified leaders is that trust takes years to build, but seconds to destroy. All those marketing dollars spent on user acquisition will be wasted if the content on the site is poor.

During online transactions, trust is very important because it connects to a basic human want: As people, all we want is a great experience.

The experience of getting exactly what we paid for.

The experience of not worrying about our safety.

The experience of being delighted.

And this is where a lot of businesses have stumbled.

They’ve given revenue and growth the front seat in an effort to execute their business vision, when in fact, revenue and growth should be the byproducts of delighted customers.

At Squad, we want to help our clients become more customer-centric.

The first step to becoming customer-centric is providing your users with a safer environment to conduct online purchases. This means fixing the problem at the source: taking a proactive stance by stopping fraudulent listings before they go live on a marketplace.

In other words, giving marketplaces access to the best content moderation system, not just for today, but for the future as well.

Can A.I. safeguard user trust?

We can’t predict the future, but given the pace of innovation in tech, there’s merit in believing that something completely new and game-changing will disrupt content moderation and Trust & Safety operations, especially in artificial intelligence.

A few years ago, A.I. was available only to a handful of big companies that had the intellectual resources and the infrastructure to deploy it. That has changed today.

Companies that integrate A.I. by the year 2020 are 4 times more likely to succeed than companies that do not.

(Gartner)

The challenge of content moderation

Traditionally, content was moderated by humans. Over time, teams spotted patterns and wrote static, keyword-based rules to filter out some of the content automatically, leaving humans to handle the rest. Most marketplaces and classifieds still use this setup.

Fraudsters, however, got smarter. Rule engines are easy to beat, and human moderators are slow to react. This is when more sophisticated data science and machine learning started being put to work.
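To make the weakness of static rules concrete, here is a minimal Python sketch of a keyword rule engine. The blocklist, the function name `rule_engine_flags`, and the example listings are hypothetical; the point is only that a one-character substitution (“R3plica” instead of “Replica”) slips past an exact keyword match.

```python
import re

# Hypothetical blocklist for illustration; real rule sets are far larger.
BLOCKED_KEYWORDS = {"replica", "counterfeit", "fake"}


def rule_engine_flags(listing_text: str) -> bool:
    """Return True if any blocked keyword appears as a whole word."""
    # Lowercase, then split into runs of letters; digits break words apart.
    words = re.findall(r"[a-z]+", listing_text.lower())
    return any(word in BLOCKED_KEYWORDS for word in words)


print(rule_engine_flags("Replica designer handbag, like new"))  # True: caught
print(rule_engine_flags("R3plica designer handbag, like new"))  # False: slips through
```

Every workaround a fraudster finds needs a new hand-written rule, which is why static rules fall behind.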

A.I. can find patterns within data and identify objects in images, making rule engines smarter and automating up to 90% of moderation with high accuracy. But how hard is it to get there?

Let’s break down why A.I. and humans need to solve the problem together.

The case for A.I.

According to CB Insights, “over 55 private companies using A.I. across different industries have been acquired in 2017 alone. Google, Apple, Facebook, Intel, Microsoft, and Amazon have been the most active acquirers in A.I., with the majority of acquisitions falling in core A.I. technologies, such as image recognition and natural language processing.”

(CB Insights)

Clearly, A.I. is a competitive differentiator which can pave the way for market leadership.

There are off-the-shelf A.I. APIs that most companies expect to power their moderation systems flawlessly, right out of the gate, once they’ve set them up. Something like this:

(Case 1 – Expectation: Humans moderate 10%, A.I. moderates 90%)

When in reality, it’ll be more like this:

(Case 2 – Reality: Humans moderate 90%, A.I. moderates 10%)
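The gap between the two cases is easiest to see as human workload. A minimal sketch, assuming a hypothetical volume of 100,000 new listings a day and treating everything the A.I. does not decide as work for human moderators:

```python
def human_review_volume(total_listings: int, automation_rate: float) -> int:
    """Listings left for human moderators after the A.I. decides its share."""
    if not 0.0 <= automation_rate <= 1.0:
        raise ValueError("automation_rate must be between 0 and 1")
    return round(total_listings * (1.0 - automation_rate))


DAILY_LISTINGS = 100_000  # hypothetical volume, for illustration only

expected = human_review_volume(DAILY_LISTINGS, 0.90)  # Case 1: A.I. handles 90%
reality = human_review_volume(DAILY_LISTINGS, 0.10)   # Case 2: A.I. handles 10%
print(expected, reality, reality / expected)  # 10000 90000 9.0
```

Under these assumptions, the human review queue in Case 2 is nine times larger than the one teams planned for in Case 1.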

But getting to ‘Case 1’ is possible. It depends on the quality and reliability of the data businesses have accumulated, because quality data is the essential ingredient for training accurate A.I. models.

By 2020, companies that are digitally trustworthy will generate 20% more online profit than those that are not.

(Gartner)

One of the challenges companies face in developing custom A.I. models is the time it takes to collect data and train models, simply because no single model fits every company’s use case. More than 60% of a data scientist’s time is spent on cleaning and labeling data, that is, if you can hire data scientists in the first place!

Unless, that is, you have access to billions of data points like the ones Facebook and Google are sitting on (and even their systems are far from perfect).

A.I. is a necessary competitive advantage, but it needs to be trained continuously on controlled data powered by humans-in-the-loop.

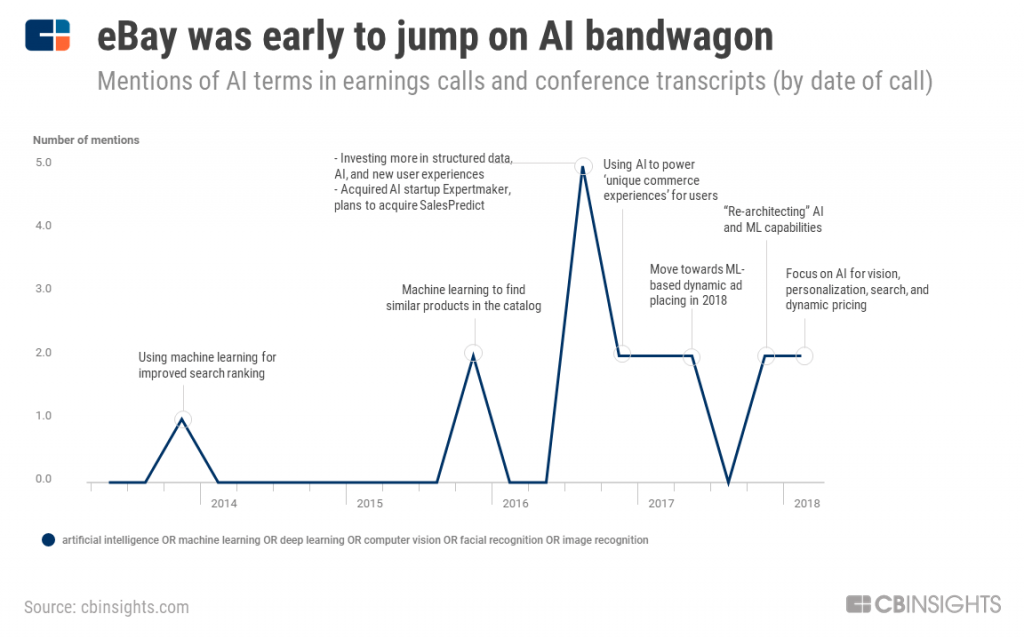

In the C2C context, big players like eBay already have a considerable head start in adopting A.I. to power ‘unique commerce experiences’ and personalization.

(CB Insights)

54% of business executives say A.I. solutions implemented in their businesses have already increased productivity.

(PwC)

If you’re someone who is on the fence about whether or not to invest in A.I. systems, here are three questions that McKinsey created to help you come to a decision sooner:

To summarize, A.I. is powerful, but putting it to work is a totally different ball game. There are all sorts of challenges, from finding the right engineering talent to training the models on well-labeled data, integrating them with legacy infrastructure, and having a team to handle exceptions; the list goes on.

The case for Humans

Humans-in-the-loop are vital for building scalable A.I. models.

When it comes to moderating content for marketplaces, the biggest challenges with human moderation are:

1. A lack of experienced moderation specialists, and the burden of training people from scratch – giving them context on your marketplace policies, helping them build a thick skin, and teaching them to make critical ‘accept/reject’ decisions quickly

2. Building interfaces for efficient and high-quality moderation – the speed at which you moderate is critical, because unmoderated content can quickly receive more exposure than it should

3. Data-and-analytics-driven feedback loops – to ensure quality, consistency, and to detect new problems

The above cycle is time-consuming and costly. It’s not just about training people but also setting up the infrastructure in-house. And at the end of the day, these processes can still result in a high employee turnover rate.
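The third challenge, data-driven feedback loops, often starts with something as simple as scoring each moderator against listings whose correct decision is already known (a “gold standard” set). The sketch below assumes that setup; the gold set, the moderator names, and the 0.9 coaching threshold are all hypothetical.

```python
from collections import defaultdict

# Hypothetical gold-standard answers: listing ID -> correct decision.
GOLD = {"L1": "reject", "L2": "accept", "L3": "reject", "L4": "accept"}


def moderator_accuracy(judgments):
    """judgments: iterable of (moderator, listing_id, decision) tuples.

    Only listings in the gold set are scored; returns moderator -> accuracy.
    """
    correct = defaultdict(int)
    scored = defaultdict(int)
    for moderator, listing_id, decision in judgments:
        if listing_id not in GOLD:
            continue  # no known answer to score against
        scored[moderator] += 1
        correct[moderator] += decision == GOLD[listing_id]  # True counts as 1
    return {m: correct[m] / scored[m] for m in scored}


def needs_coaching(accuracy, threshold=0.9):
    """Moderators whose gold-set accuracy falls below the (assumed) threshold."""
    return sorted(m for m, acc in accuracy.items() if acc < threshold)


judgments = [
    ("ana", "L1", "reject"), ("ana", "L2", "accept"),
    ("ana", "L3", "reject"), ("ana", "L4", "accept"),
    ("ben", "L1", "accept"), ("ben", "L2", "accept"),
    ("ben", "L3", "reject"), ("ben", "L5", "reject"),
]
acc = moderator_accuracy(judgments)
print(acc)                  # {'ana': 1.0, 'ben': 0.6666666666666666}
print(needs_coaching(acc))  # ['ben']
```

Tracking these scores over time is one way to catch drops in quality and consistency, and disagreements on gold items can also surface policies that need clearer guidelines.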

Having skilled human moderators is indispensable in today’s marketplace, since they excel at subjective decision-making, and their judgments help give context to and train the A.I. models.

For example, a set of kitchen knives might be categorized as a ‘weapon’ by an A.I. model but a human-in-the-loop would know better.

(A.I. shows medium confidence and therefore the listing is escalated for human review)
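The escalation in the knife example comes down to confidence thresholds. A minimal sketch, assuming the model outputs a probability that a listing violates policy and using two hypothetical cut-offs: very high confidence is auto-rejected, very low confidence is auto-accepted, and everything in between goes to a human.

```python
from dataclasses import dataclass

# Hypothetical thresholds; in practice they would be tuned per category and policy.
AUTO_REJECT_AT = 0.95     # at or above this, reject without human review
AUTO_ACCEPT_BELOW = 0.20  # below this, accept without human review


@dataclass
class Prediction:
    listing_id: str
    label: str         # predicted policy category, e.g. "weapon"
    confidence: float  # model's probability that the listing violates policy


def route(pred: Prediction) -> str:
    """Return 'reject', 'accept', or 'human_review' for one model prediction."""
    if pred.confidence >= AUTO_REJECT_AT:
        return "reject"
    if pred.confidence < AUTO_ACCEPT_BELOW:
        return "accept"
    return "human_review"  # medium confidence: escalate to a moderator


print(route(Prediction("kitchen-knife-set", "weapon", 0.60)))  # human_review
```

Where the two thresholds sit is a trade-off: widening the middle band sends more listings to humans (slower, but safer), while narrowing it raises the automation rate at the risk of more wrong automatic decisions.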

However, humans also bring their own set of limitations, the primary one being that productivity deteriorates as the day goes on (even after taking naps).

Is it possible to counter this drop in productivity? Can ‘happiness’ counter the drudgery of boring and repetitive work? Maybe.

A nine-month study of a Chinese call center, discussed in Harvard Business Review, found that workers who were given the choice to work from home were not only happier but also more productive than their colleagues in the office.

When the study’s lead researcher, Professor Nicholas Bloom, was asked what he thought led to the increase in productivity, he replied: “One-third of the productivity increase, we think, was due to having a quieter environment, which makes it easier to process calls. At home, people don’t experience what we call the ‘cake in the break room’ effect. Offices are actually incredibly distracting places. The other two-thirds can be attributed to the fact that the people at home worked more hours. They started earlier, took shorter breaks, and worked until the end of the day. They had no commute. They didn’t run errands at lunch. Sick days for employees working from home plummeted.”

This doesn’t mean that all work-from-home employees perform better. But working from anywhere, on your own schedule, for as many hours as you want, does have the potential to boost productivity.

To summarize, the need for human moderators will persist as user-generated content and fraud grow more complex. With the right kind of infrastructure, much of the work these moderators do can be automated, and they can be made more efficient. Better yet, they can be distributed (or decentralized), making the process even more efficient and scalable.

Can content moderation power long-term profitability?

Organizations have at least 3 levers to increase profitability:

• They can acquire more paying users than they lose, or increase retention/decrease churn

• They can increase Average Revenue Per User (ARPU)

• They can cut costs

Of the three levers above, it’s been shown time and again that retaining customers is often far cheaper and more beneficial than acquiring new ones, and returning customers tend to spend more. This is exactly what marketplaces should target first.

Here are the primary factors that can make marketplace operations better, and business more profitable:

1. Increase automation (to decrease human workload)

2. Decrease turnaround time (faster time to products going live on the site)

3. Increase conversions (a byproduct of a well-moderated catalog + user trust in the marketplace)

4. Cut costs (a byproduct of automation + more efficient operations)

Businesses care about the number of new customers per month. They’re also worried about increasing the lifetime value per customer.

The only way any business achieves this is by ensuring its customers remain happy.

It’s easier said than done, because for every negative experience a customer has on your platform, they need many more positive experiences (sometimes more than 10) to make up for it, and even then, they may lose their affinity for the brand.

How do you ensure customers remain happy?

By having a fanatical commitment to making sure that they have a safe environment to conduct their transactions in.

A safe environment for an open marketplace or classifieds site can only be guaranteed by a robust moderation system. One of the most successful approaches is one in which A.I. does the heavy lifting and trained humans-in-the-loop provide feedback to further optimize the A.I. model.

(An under-the-hood look at Squad’s moderation system. A perfect combination of Humans + A.I.)

Oftentimes, before we meet our clients, we’re pretty sure the question they’re asking themselves is: “Should we build or buy?”

Well, building A.I. systems is the preferred way to go if you have access to:

1. Talent: Hiring top-notch machine learning experts is a challenge. The average salary of a data scientist at Google is around $350,000 per year. And Tencent recently reported that there are only around 300,000 qualified A.I. engineers worldwide, while the demand is in the millions.

2. Infrastructure: Creating machine learning models also requires giving your team access to cutting-edge hardware and software, either on-premises or through the cloud. Most enterprise services that make this infrastructure accessible through the cloud come at a hefty cost.

3. Data: Clean and reliable datasets are a prerequisite to train A.I. models. The more data a model is exposed to, the better it becomes at making correct judgments.

But if you do NOT have access to any one (or all) of these elements, then it’s better to look for a partner who does.

Making marketplaces and classifieds profitable: Content moderation using Humans + A.I.

How do you create a near-perfect content moderation system that guarantees user safety?

Like most marketplaces and classifieds, do you outsource the automation to an A.I. vendor and the human moderation to a business process outsourcing (BPO) company? Or do you rely on your in-house team to do both?

The problem we’re effectively trying to solve is how to best combine the speed and accuracy of A.I. systems with the subjective decision-making capabilities of humans.

The answer lies in an integrated system (all data under one roof) that can facilitate seamless data sharing between humans and A.I.

Data has to flow uninterrupted between humans and machines, in a single system, to maintain data quality, accuracy, and sanity when feeding judgments to train the machine learning models. The easiest way to mess up an A.I. model is to expose it to conflicting information. The model will still give mostly right answers, but its confidence will go down, which:

1. defeats the purpose of automation, so

2. you’ll have to add another quality-check layer by having a person double-check the judgments, which in turn

3. slows down the speed at which the listing goes live on the marketplace
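A toy illustration of why conflicting information hurts: in the sketch below, the “model” is simply the share of training judgments that agree for a given pattern, a deliberately simplified stand-in for a real classifier’s confidence. The 0.9 auto-decision threshold is a hypothetical value. When two teams label the same kind of listing differently, the majority answer stays the same, but confidence drops below the threshold, so the listing needs a human double-check.

```python
from collections import Counter

AUTO_DECIDE_AT = 0.9  # hypothetical confidence needed to skip human review


def label_confidence(labels):
    """Majority label and its share among the training judgments for a pattern."""
    counts = Counter(labels)
    label, votes = counts.most_common(1)[0]
    return label, votes / len(labels)


consistent = ["reject"] * 10                    # every reviewer applied the same policy
conflicting = ["reject"] * 6 + ["accept"] * 4   # two teams applied the policy differently

for name, labels in [("consistent", consistent), ("conflicting", conflicting)]:
    label, conf = label_confidence(labels)
    needs_human = conf < AUTO_DECIDE_AT
    print(name, label, conf, needs_human)
# consistent reject 1.0 False
# conflicting reject 0.6 True
```

Real models are more complex, but the direction is the same: inconsistent labels blur the signal, and the uncertainty shows up as more items routed to people.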

And this is where Squad comes in.

(The Squad platform)

Squad sits between sellers and your platform, ingesting millions of unmoderated listings and converting them into moderated ones. We’re able to do this efficiently, at scale, by deploying the best A.I. models, an experienced data science team, and a decentralized team of more than 100,000 trained humans-in-the-loop who perform work through Squad app interfaces.

By 2019, startups will overtake Amazon, Google, IBM, and Microsoft in driving the A.I. economy with disruptive business solutions.

(Gartner)

On average, Squad has achieved the following results for its customers:

1. Automated between 60 and 95% of their moderation volumes

2. Increased speed of moderation by 5x

3. Improved KPIs for threat detection by 2x

4. Created cost savings of 50-70%

As a result, our customers have also seen platform conversions improve by 5-10%, thanks to consistently high-quality content on the platform. Other big areas of impact include fewer counterfeits, faster identification of scammers, and better highlighting of higher-quality listings.

These results have only been possible because:

1. We found the right recipe of Humans + A.I.,

2. Automation pays extreme dividends,

3. The distributed human workforce provides many cost benefits,

4. Our in-house teams are both brilliant and hungry to hunt for improvements day after day.

And even though we have a long road ahead of us, it’s helping businesses succeed that makes this journey worthwhile, every day.

Squad is reimagining how work gets done. We want to help our customers give each of their users the best and safest online buying experience.

Rishabh, Greg, Vanhishikha – Thank you for all the extensive inputs and suggestions.